I'm looking for feedback on my overall architecture and, more specifically, on my MQTT configuration.

I have already tested most of the details, but any suggestions for improvements or other considerations are welcome:

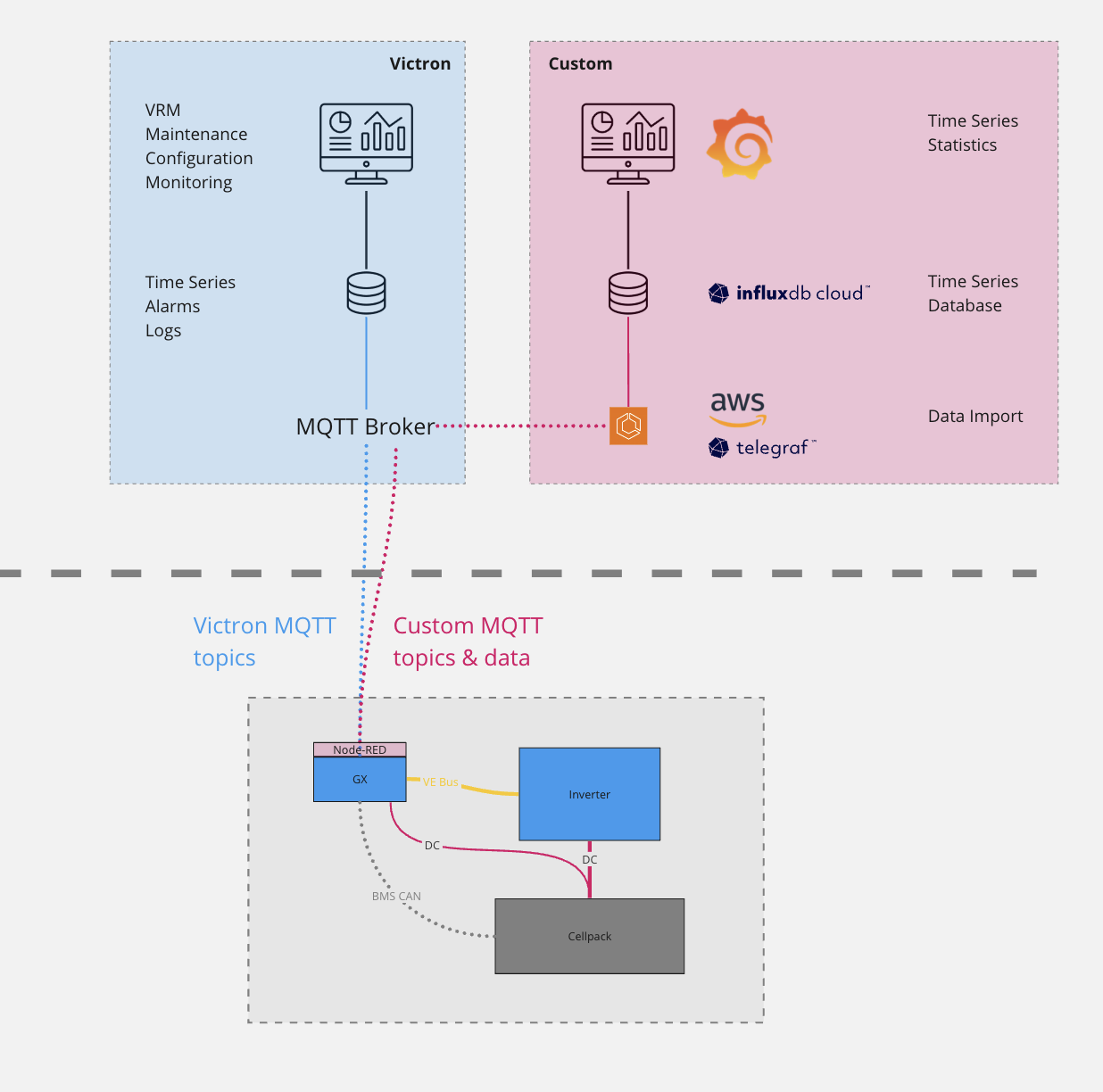

I own several Cerbo GX devices for ESS installations. On each Cerbo, my custom logic for controlling the MultiPlus inverter runs in Node-RED.

In the cloud, I have a time-series database where I want to store long-term, seamless (gap-free) data from all sites, similar to what Victron shows in the VRM portal (charge power, voltage levels, and so on). I also want to store time-series data from my own Node-RED logic. The data should be kept long-term without losses or aggregation, so not losing messages is important.
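One way to check whether the stored series really is gap-free is to scan consecutive timestamps for intervals much longer than the expected publish rate. The sketch below assumes a signal published at a roughly fixed interval (for example, a hypothetical heartbeat from your own Node-RED flow); values that are only published on change may show false gaps. The function name `find_gaps` and the `tolerance` factor are illustrative assumptions.

```python
from typing import List, Tuple


def find_gaps(timestamps: List[float], expected_interval: float,
              tolerance: float = 1.5) -> List[Tuple[float, float]]:
    """Return (start, end) pairs where consecutive samples are further apart
    than tolerance * expected_interval (all values in seconds)."""
    ordered = sorted(timestamps)
    limit = expected_interval * tolerance
    gaps = []
    # Compare each sample with its successor.
    for prev, cur in zip(ordered, ordered[1:]):
        if cur - prev > limit:
            gaps.append((prev, cur))
    return gaps
```

For example, `find_gaps([0, 5, 10, 30, 35], 5.0)` flags the interval between 10 and 30, which could then be compared against broker or network logs for the same period.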

My current implementation for storing data works like this: the cloud server runs an MQTT client that subscribes to several specific topics on Victron's MQTT brokers (mqttXX.victronenergy.com). When Venus OS or my Node-RED code publishes a message to the local MQTT broker, it is forwarded to Victron's MQTT brokers, which then deliver it to my data collector.
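On the collector side, each incoming message has to be turned into a time-series point. The sketch below assumes the Venus OS topic layout `N/<portal_id>/<service>/<instance>/<path>` with a JSON payload of the form `{"value": ...}`; the optional `ts` field is a hypothetical source timestamp that your own Node-RED flows could add. Without it, the collector falls back to its receive time, which means late or redelivered messages get the time they arrived rather than the time they were measured.

```python
import json
from dataclasses import dataclass
from typing import Any, Optional


@dataclass(frozen=True)
class Point:
    portal_id: str
    service: str
    instance: str
    path: str
    value: Any
    ts: float  # seconds since epoch


def parse_message(topic: str, payload: bytes, received_at: float) -> Optional[Point]:
    """Turn one MQTT message into a time-series point, or None if it doesn't match."""
    parts = topic.split("/")
    # Assumed layout: N/<portal_id>/<service>/<instance>/<path...>
    if len(parts) < 5 or parts[0] != "N":
        return None
    portal_id, service, instance = parts[1], parts[2], parts[3]
    path = "/".join(parts[4:])
    try:
        body = json.loads(payload)  # json.JSONDecodeError and UnicodeDecodeError are ValueErrors
    except ValueError:
        return None
    if not isinstance(body, dict) or "value" not in body:
        return None
    # Hypothetical "ts" field: prefer a source timestamp if the publisher added one,
    # otherwise fall back to the collector's receive time.
    ts = body.get("ts", received_at)
    return Point(portal_id, service, instance, path, body["value"], float(ts))
```

Keeping the portal ID as a separate field makes it straightforward to tag or partition the data per site in the time-series database.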

My main concern is whether I can configure QoS levels (1 or 2) end to end, and how to handle disconnects, so that a dropped connection between a Cerbo and Victron, or between Victron and my server, doesn't leave gaps in the time series.
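With QoS 1, MQTT guarantees at-least-once delivery, so after a reconnect the collector can receive the same message more than once. A common way to tolerate this is an idempotent write keyed on the sample's identity. The sketch below uses SQLite only as a stand-in for the real time-series database, and assumes each sample carries a source timestamp `ts` (a receive timestamp would differ between redeliveries and defeat the deduplication). The table and function names are hypothetical.

```python
import sqlite3


def open_store(path: str = ":memory:") -> sqlite3.Connection:
    """Create the sample table; the primary key defines what counts as a duplicate."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS samples (
               portal_id TEXT NOT NULL,
               path      TEXT NOT NULL,
               ts        REAL NOT NULL,
               value     REAL,
               PRIMARY KEY (portal_id, path, ts)
           )"""
    )
    return conn


def store_sample(conn: sqlite3.Connection, portal_id: str, path: str,
                 ts: float, value: float) -> bool:
    """Insert one sample; return False if it was already stored (a redelivery)."""
    cur = conn.execute(
        "INSERT OR IGNORE INTO samples (portal_id, path, ts, value) VALUES (?, ?, ?, ?)",
        (portal_id, path, ts, value),
    )
    conn.commit()
    # rowcount is 1 for a new row and 0 when the primary key already existed.
    return cur.rowcount == 1
```

The same idea carries over to most time-series databases: if writes are keyed by site, path and source timestamp, storing a duplicate overwrites or ignores the existing point instead of adding a second one.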

Any feedback on this approach? I'm also open to suggestions for alternative solutions or best practices that might address my concerns.