The hysteresis (if applicable) is 1%, and I didn’t mean to change it. Currently the hysteresis only has an effect when switching from idle to a charge or discharge case. During consecutive charging it is set to “0” so the system can reach the target SoC “spot on”. (After reaching the target, the hysteresis is set back to 1 to prevent immediate charges if the actual SoC drops a bit during idle, for example.)
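To make that a bit more tangible, here is a minimal Python sketch of the behaviour described above. It is not the actual implementation: the function name `next_state`, the state names, and whether the comparison is strict or not are my assumptions for illustration only.

```python
def next_state(state, soc, target_soc, hysteresis=1.0):
    """Return 'idle', 'charge' or 'discharge' for the next step (illustrative only)."""
    if state == "idle":
        # Hysteresis only matters when leaving idle: the SoC has to drift
        # more than `hysteresis` percent away from the target first.
        if soc < target_soc - hysteresis:
            return "charge"
        if soc > target_soc + hysteresis:
            return "discharge"
        return "idle"
    # Already charging or discharging: hysteresis is effectively 0,
    # so keep going until the target itself is reached.
    if state == "charge" and soc < target_soc:
        return "charge"
    if state == "discharge" and soc > target_soc:
        return "discharge"
    return "idle"


print(next_state("idle", 49.5, 50.0))    # idle (drop is within the 1% hysteresis)
print(next_state("idle", 48.9, 50.0))    # charge
print(next_state("charge", 49.5, 50.0))  # charge (continues until 50.0 is reached)
```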

I’ve been thinking about this for the past few hours, and the +/-0.5 example I provided is probably not easy to understand without a bit more explanation:

There are two types of systems: those that report SoC values with a decimal digit, i.e. with 0.1 precision, and those that only report whole integers. (This depends on the BMS manufacturer.)

For the integer-only type, a decimal target SoC would introduce a number of “issues”:

- Target SoCs in the range of .1 - .9 could never be hit exactly to enter idle; the system would always see a charge or discharge requirement first, because it can only be “below” or “above” that target SoC.

- When discharging, a target SoC like “30.9” would always require such a system to discharge down to the next whole integer (31->30) before the goal is achieved, thereby spending almost the whole hysteresis value just to reach that target.
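The two points above are plain arithmetic, sketched here in Python. To avoid floating-point noise, everything is expressed in tenths of a percent; the function names and the two `*_BMS` constants are made up for this example and do not come from the real code.

```python
INTEGER_BMS = 10  # reports whole percent, i.e. steps of 10 tenths (assumption)
DECIMAL_BMS = 1   # reports 0.1 % steps


def can_hit_exactly(target_tenths, step_tenths):
    """True if the BMS can ever report exactly the target SoC."""
    return target_tenths % step_tenths == 0


def discharge_overshoot_tenths(target_tenths, step_tenths):
    """How far below the target the reported SoC must fall while discharging
    before 'target reached' can be detected."""
    reachable = (target_tenths // step_tenths) * step_tenths
    return target_tenths - reachable


# Target SoC of 30.9 % = 309 tenths
print(can_hit_exactly(309, INTEGER_BMS))             # False
print(discharge_overshoot_tenths(309, INTEGER_BMS))  # 9 -> 0.9 %, nearly the 1 % hysteresis
print(discharge_overshoot_tenths(309, DECIMAL_BMS))  # 0
```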

For the decimal type of system these issues won’t exist, so it would be kind of possible to say “Well, then let’s adjust the target SoC to the system type and we are good” - but decimal-based systems wouldn’t see any “gain” from doing this. What is the underlying assumption that a system which does not reach “48.0 %” precisely enough would operate better if the target SoC were “47.7%”?

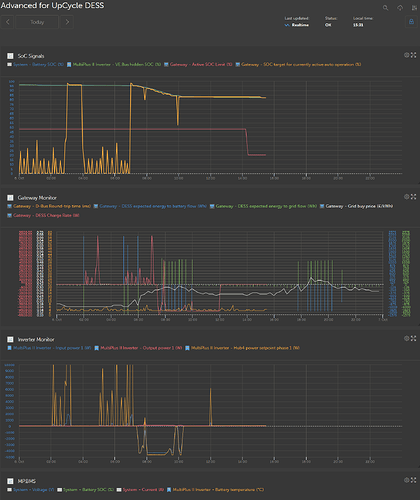

To think about this “question”, let me add another detail about the mentioned “charge-rate-correction”: whenever the local system’s SoC value “changes”, the system calculates an updated rate that is required to reach the target SoC by the end of the window, because the moment of a reported SoC change is the moment the missing amount of energy is known “precisely”.
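As a rough sketch of what such a correction boils down to (again, not the real code): missing energy divided by the time left in the window. The function name, the parameters and the 10 kWh capacity are assumptions picked for the example.

```python
def required_rate_w(soc, target_soc, capacity_kwh, seconds_left):
    """Power in watts needed to reach target_soc by the end of the window.

    Positive means charging, negative means discharging.
    """
    if seconds_left <= 0:
        raise ValueError("window has already ended")
    missing_kwh = (target_soc - soc) / 100.0 * capacity_kwh
    # kWh -> Wh, then spread over the remaining time expressed in hours
    return missing_kwh * 1000.0 * 3600.0 / seconds_left


# Window start: 47 % -> 50 % on a 10 kWh battery, one hour left
print(required_rate_w(47.0, 50.0, 10.0, 3600))  # roughly 300 W

# SoC flips to 48 % a bit late, only 2000 s left: the rate is corrected upwards
print(required_rate_w(48.0, 50.0, 10.0, 2000))  # roughly 360 W
```

The recalculation happens at the moment the reported SoC changes because that is when `soc` is actually trustworthy; in between, the true value sits somewhere inside the reporting step.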

So that means an integer-based system receiving a target SoC of +3% will go through a total of 3 charge-rate calculations within that window (the initial rate plus corrections at +1 and +2), while a decimal-SoC-based system would go through 30. In other words, a system that reports a decimal SoC would/should/could already be way more precise at reaching a target SoC value “spot on” - no matter if that is “50.0” or “49.7”.

Just compare the last step at a target SoC of 50: the integer-based system would have its last correction at 49% and then possibly miss the 50% by a few seconds. A decimal-based system would have 9 more corrections to go, and the last one, done at 49.9%, is such a minor step that it shouldn’t cause any huge mismatch in reaching any SoC level. If it still does, then there might be an entirely different issue involved.
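The counting above can be reproduced with a few lines of Python. This is only an illustration; the helper name `rate_points` is made up, and values are in tenths of a percent so the steps stay exact.

```python
def rate_points(start_tenths, target_tenths, step_tenths):
    """SoC values (in tenths of a percent) at which a charge rate is calculated:
    the starting value plus every reported change before the target."""
    return list(range(start_tenths, target_tenths, step_tenths))


integer_points = rate_points(470, 500, 10)  # 47 % -> 50 %, whole-percent BMS
decimal_points = rate_points(470, 500, 1)   # same window, 0.1 % BMS

print(len(integer_points), integer_points[-1] / 10)  # 3 49.0
print(len(decimal_points), decimal_points[-1] / 10)  # 30 49.9
```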

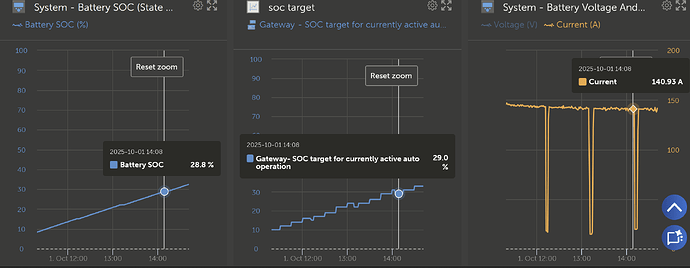

So I will also send you a PM; if you could give me your VRM id, I can have a look at your system and possibly get a better idea of what’s not working ideally and what the root cause might be.

Don’t get me wrong, I’m not a CR-refuser. I’m the kind of guy who gladly takes user requests or proposals into internal chats for discussion - but if I can’t see an advantage in it, I’ll have a hard time justifying why it should be changed/improved. If I can outline a problem and somewhat prove that this may solve it, the acceptance probability is an entirely different story.

(Yet, just me not seeing a benefit in that doesn’t stop you from proposing it ofc.)
